Hoyoung Pak

Chicago

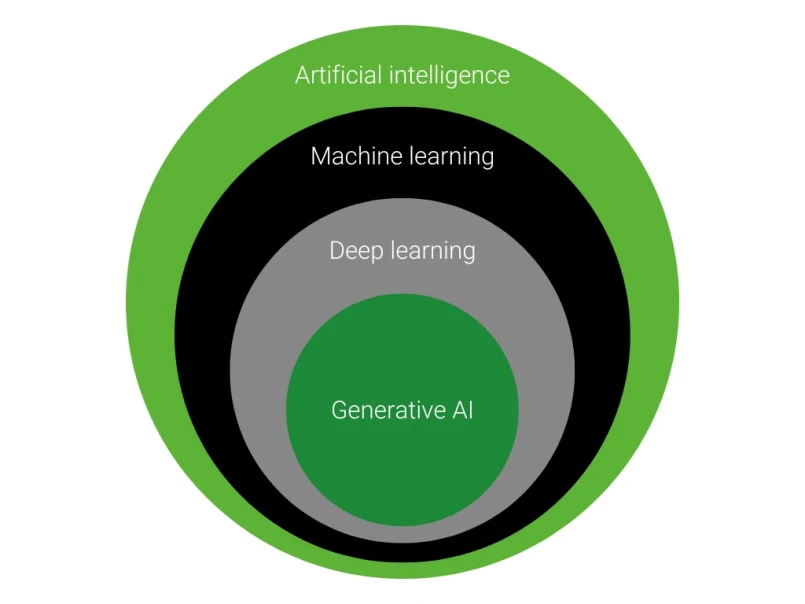

AI is reshaping how businesses operate and compete. Machine learning (ML) and generative AI (GenAI) offer significant opportunities to improve efficiency, foster innovation, and drive sustainable growth.

Understanding how AI works can no longer be left to the tech team alone.

Today, it is a strategic imperative for CEOs and other senior leaders to grasp the key terminology, the attributes of each technology, and how these technologies work together. This is the only way to make the right investments, ask the right questions, and ultimately unlock AI's full potential for the organization.

Plenty of technical explanations of AI already exist. Here, we share our answers to the questions C-suite executives ask us most often, framed in practical business terms.

ML and generative AI can drive business value in several ways:

By leveraging ML and GenAI to drive automation, improve decision-making, enhance customer experiences, innovate, predict future trends, and manage risks, businesses can gain a competitive edge, optimize operations, and unlock new growth opportunities.

Senior leaders play an important role here: ensuring that AI projects align with the company's value proposition and advance its strategy. A low-cost producer will want to emphasize automation, efficiency, and similar activities; a company whose value proposition centers on customer experience will have different priorities and should pursue different projects.

The primary tasks of ML can be broadly categorized into several key areas, each with distinct applications that drive business value:

GenAI encompasses several capabilities that have implications for business innovation, content creation, and operational efficiency:

While AI can be a powerful tool in various business contexts, there are situations where it may not be the best choice. Here are a few examples:

ML learns in two basic ways:

There are variations and hybrids. One important approach is reinforcement learning, in which the algorithm, or agent, learns to make decisions by taking actions and receiving feedback in the form of rewards or penalties. Through iterative trial and error, the agent gets progressively better at earning rewards.
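The trial-and-error loop can be sketched in its simplest reinforcement-learning setting, a "multi-armed bandit" with no changing state. The agent repeatedly picks one of three hypothetical actions (think of three promotional offers). It receives +1 as a reward or -1 as a penalty and updates its running estimate of each action's value. The payout rates, the `run_agent` function, and the epsilon-greedy strategy are illustrative assumptions. They do not describe any particular product or method.

```python
import random

# Hypothetical example: three possible actions, each with a hidden probability
# of earning a reward. The agent never sees these numbers directly.
PAYOUT_RATES = [0.2, 0.5, 0.7]


def run_agent(steps: int = 5000, epsilon: float = 0.1, seed: int = 0) -> list[float]:
    """Epsilon-greedy agent; returns its learned value estimate for each action."""
    rng = random.Random(seed)
    counts = [0] * len(PAYOUT_RATES)       # how often each action was tried
    estimates = [0.0] * len(PAYOUT_RATES)  # running average feedback per action

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action to keep learning.
            action = rng.randrange(len(PAYOUT_RATES))
        else:
            # Exploit: pick the action that has paid off best so far.
            action = max(range(len(PAYOUT_RATES)), key=lambda a: estimates[a])

        # Feedback from the environment: +1 reward on success, -1 penalty otherwise.
        feedback = 1.0 if rng.random() < PAYOUT_RATES[action] else -1.0

        # Update the running average for the chosen action (incremental mean).
        counts[action] += 1
        estimates[action] += (feedback - estimates[action]) / counts[action]

    return estimates


if __name__ == "__main__":
    learned = run_agent()
    best = max(range(len(learned)), key=lambda a: learned[a])
    print("Learned action values:", [round(v, 2) for v in learned])
    print("Preferred action:", best)
```

The `epsilon` parameter sets the trade-off between exploring untested options and exploiting the best-known one. Production systems add state, delayed rewards, and far richer models, but the reward-driven feedback loop is the same idea.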

The "black box" nature of ML and GenAI refers to the difficulty in understanding how these models make decisions or generate outputs, due to their complex and often opaque algorithms. This can pose challenges in terms of trust, accountability, and compliance.

To address this, the growing field of explainable AI (XAI) aims to make the decision-making processes of AI systems more transparent and understandable. Collaboration between data scientists and business leaders can also help develop clearer guidelines and explanations for AI behavior. Regularly reviewing and auditing AI models for fairness, bias, and accuracy further helps demystify the black box and supports responsible, ethical AI use.
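Permutation feature importance is one widely used explainability technique. It shuffles one input at a time and measures how much the model's accuracy drops: a large drop means the model relies heavily on that input. The sketch below applies this to a hypothetical loan-approval rule that stands in for a black-box model. The data, the feature names, and the `black_box_model`, `make_data`, and `permutation_importance` functions are all invented for illustration.

```python
import random


def black_box_model(row: dict) -> int:
    """Hypothetical opaque model: relies mostly on income, a little on tenure,
    and ignores the 'noise' feature entirely."""
    score = 0.8 * row["income"] + 0.2 * row["tenure"]
    return 1 if score > 0.5 else 0


def make_data(n: int, rng: random.Random):
    """Synthetic applicants; labels come from the model itself, so baseline accuracy is 1.0."""
    rows = [{"income": rng.random(), "tenure": rng.random(), "noise": rng.random()}
            for _ in range(n)]
    labels = [black_box_model(r) for r in rows]
    return rows, labels


def accuracy(rows, labels) -> float:
    return sum(black_box_model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(rows, labels, feature: str, rng: random.Random) -> float:
    """Accuracy drop when one feature's values are shuffled across rows."""
    baseline = accuracy(rows, labels)
    shuffled = [r[feature] for r in rows]
    rng.shuffle(shuffled)
    perturbed = [{**r, feature: v} for r, v in zip(rows, shuffled)]
    return baseline - accuracy(perturbed, labels)


if __name__ == "__main__":
    rows, labels = make_data(1000, random.Random(42))
    rng = random.Random(7)
    for feature in ("income", "tenure", "noise"):
        drop = permutation_importance(rows, labels, feature, rng)
        print(f"{feature:>7}: accuracy drop {drop:.3f}")
```

Because the technique only needs the model's inputs and outputs, it can be applied to models whose internals are hard to inspect. That is why it is a common starting point for the reviews and audits described above.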

Ethical considerations in using ML and GenAI span several key areas, reflecting the importance of responsible development and deployment:

Addressing these ethical considerations involves a multidisciplinary approach, incorporating legal, technical, and ethical expertise. By proactively engaging with these issues, businesses can lead in the responsible use of ML and GenAI, fostering trust and promoting a positive societal impact.